Necessary Condition Analysis (NCA)

Abstract

The necessary condition analysis (NCA) is a data analysis technique for identifying necessary (but not sufficient) conditions in data sets. It complements traditional regression-based data analysis, including partial least squares structural equation modeling (PLS-SEM), as well as methods such as qualitative comparative analysis (QCA).

Brief Description

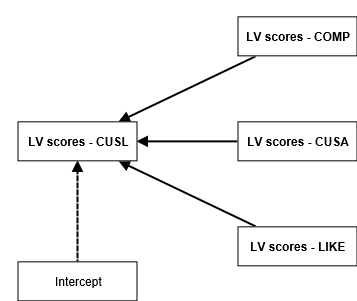

Originally developed by Dul (2016), necessary condition analysis (NCA) is a relatively new approach and data analysis technique that enables the identification of necessary conditions in data sets (Bokrantz & Dul, 2023; Dul, 2020). It can be applied to regression models and to partial least squares structural equation modeling (PLS-SEM), as explained in detail by Richter et al. (2020) and Richter et al. (2023a); see also Hair et al. (2024) and Richter et al. (2023b). SmartPLS fully supports NCA for regression models via the Necessary condition analysis (NCA) algorithm. By using the unstandardized latent variable scores or the IPMA latent variable scores on a scale from 0 to 100, it is also possible to run NCA in PLS-SEM for the partial regression models of the structural model (see the figure below).
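
To illustrate what "a scale from 0 to 100" means, the minimal Python sketch below applies min-max rescaling to a single indicator value, assuming the theoretical endpoints of the measurement scale (e.g., 1 and 7) are used. The function name `rescale_0_100` is hypothetical; SmartPLS computes the IPMA scores internally, so this only shows the underlying idea.

```python
def rescale_0_100(value: float, scale_min: float, scale_max: float) -> float:
    """Map a value from [scale_min, scale_max] onto [0, 100] (min-max rescaling)."""
    if scale_max <= scale_min:
        raise ValueError("scale_max must be greater than scale_min")
    return 100.0 * (value - scale_min) / (scale_max - scale_min)


# Example: answers on a 1-to-7 scale
print([rescale_0_100(v, 1, 7) for v in (1, 4, 7)])  # [0.0, 50.0, 100.0]
```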

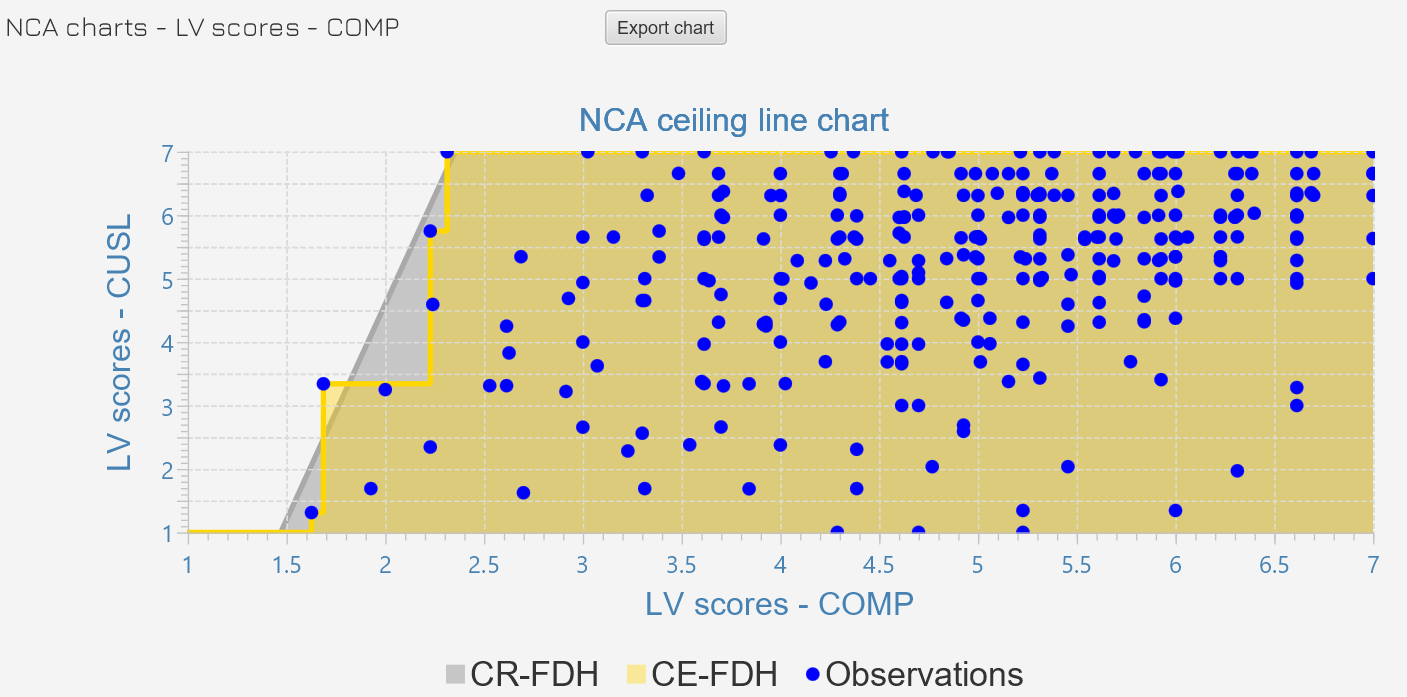

Instead of analyzing the average relationship between dependent and independent variables, NCA looks for empty areas in scatter plots of the dependent and independent variables that may indicate the presence of a necessary condition. While ordinary least squares (OLS) regression fits a line through the middle of the data points, NCA places a ceiling line on top of the data. The figure below shows the two default ceiling lines: (1) the ceiling envelopment - free disposal hull (CE-FDH) line, which is a non-decreasing step function; and (2) the ceiling regression - free disposal hull (CR-FDH) line, which is a simple linear regression line through the upper-left corner points of the CE-FDH line.
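
The two ceiling lines can be sketched in plain Python as follows. This is an illustrative implementation written for this page, not SmartPLS's code: `ce_fdh_peers` collects the upper-left corner points of the CE-FDH step function, `ce_fdh_ceiling` evaluates the step function at a given X, and `cr_fdh_line` fits an OLS line through those corner points. All function names and the data are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple


def ce_fdh_peers(xs: Sequence[float], ys: Sequence[float]) -> List[Tuple[float, float]]:
    """Upper-left corner points of the CE-FDH step function, sorted by x."""
    peers: List[Tuple[float, float]] = []
    best = float("-inf")
    for x, y in sorted(zip(xs, ys)):
        if y > best:  # the ceiling only steps up, never down
            best = y
            if peers and peers[-1][0] == x:
                peers[-1] = (x, y)  # same x: keep only the highest y
            else:
                peers.append((x, y))
    return peers


def ce_fdh_ceiling(peers: List[Tuple[float, float]], x: float) -> Optional[float]:
    """CE-FDH value at x: highest y observed at or left of x (None left of the data)."""
    value = None
    for px, py in peers:
        if px > x:
            break
        value = py
    return value


def cr_fdh_line(peers: List[Tuple[float, float]]) -> Tuple[float, float]:
    """OLS line (intercept, slope) through the CE-FDH corner points."""
    n = len(peers)
    if n < 2:
        raise ValueError("CR-FDH needs at least two corner points")
    mean_x = sum(px for px, _ in peers) / n
    mean_y = sum(py for _, py in peers) / n
    sxx = sum((px - mean_x) ** 2 for px, _ in peers)
    sxy = sum((px - mean_x) * (py - mean_y) for px, py in peers)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


xs = [1, 2, 3, 4, 5]  # condition X (hypothetical data)
ys = [2, 1, 4, 3, 5]  # outcome Y
peers = ce_fdh_peers(xs, ys)
print(peers)                     # [(1, 2), (3, 4), (5, 5)]
print(ce_fdh_ceiling(peers, 4))  # 4
print(cr_fdh_line(peers))        # roughly (1.417, 0.75)
```

In this example, the corner point (3, 4) lies above the fitted CR-FDH line, which is why the accuracy of a CR-FDH ceiling can fall below 100%.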

The ceiling line separates the space with observations from the space without observations. The larger the empty space, the larger the constraint that the condition X puts on the outcome Y. The ceiling line also indicates the minimum level of X that is required to obtain a given level of Y. This interpretation differs from that of linear regression, where an increase in X is associated, on average, with an increase in Y.

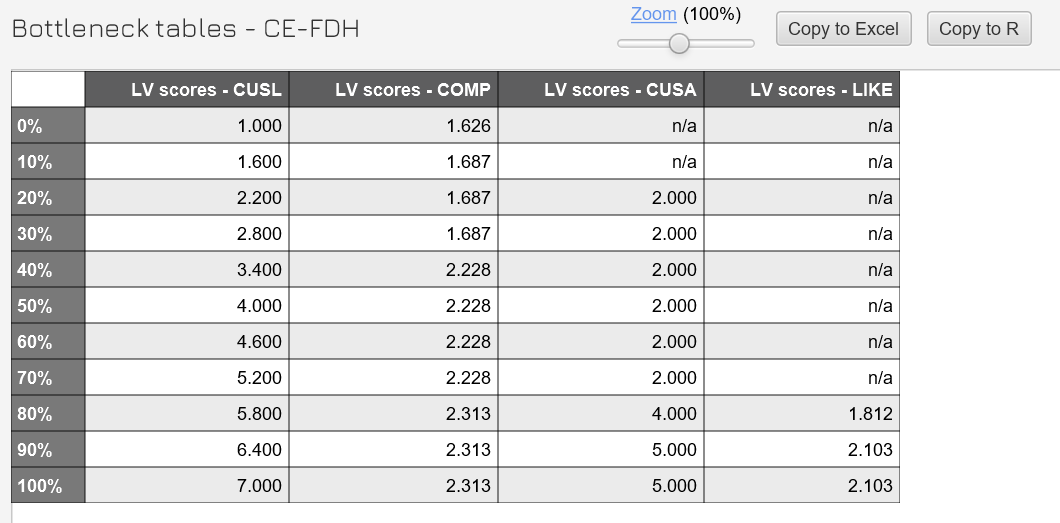

Alternatively, the bottleneck table presents the ceiling line results in tabular form (see the figure below). The first column of the table shows levels of the outcome, and each additional column shows the minimum level of a condition that must be satisfied to achieve that outcome level. Both the outcome and the conditions can be expressed as actual values, as percentages of their range, or as percentiles.
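
A bottleneck table can be derived from the CE-FDH ceiling. The hypothetical sketch below, which is independent of SmartPLS, expresses both the outcome and the condition as percentages of their observed ranges; the `steps` argument plays the same role as the Number of steps for bottleneck tables setting described under NCA Settings in SmartPLS. A value of `None` marks outcome levels for which the condition imposes no constraint (such cells are commonly shown as NN, not necessary).

```python
from typing import List, Optional, Sequence, Tuple


def ce_fdh_peers(xs: Sequence[float], ys: Sequence[float]) -> List[Tuple[float, float]]:
    """Upper-left corner points of the CE-FDH step function, sorted by x."""
    peers: List[Tuple[float, float]] = []
    best = float("-inf")
    for x, y in sorted(zip(xs, ys)):
        if y > best:
            best = y
            if peers and peers[-1][0] == x:
                peers[-1] = (x, y)
            else:
                peers.append((x, y))
    return peers


def bottleneck_table(
    xs: Sequence[float], ys: Sequence[float], steps: int = 10
) -> List[Tuple[float, Optional[float]]]:
    """Rows of (outcome level in % of range, required condition level in % of range)."""
    peers = ce_fdh_peers(xs, ys)
    x_min, x_max = min(xs), max(xs)
    y_min, y_max = min(ys), max(ys)
    if x_max == x_min:
        raise ValueError("the condition has no variation")
    rows: List[Tuple[float, Optional[float]]] = []
    for i in range(steps + 1):
        y_pct = 100 * i / steps
        # Outcome level in original units, clipped against floating-point overshoot
        y_target = min(y_min + (y_max - y_min) * i / steps, y_max)
        if peers[0][1] >= y_target:
            rows.append((y_pct, None))  # reached even at the lowest X: no constraint
            continue
        # First corner point whose ceiling reaches the target outcome level
        x_req = next(px for px, py in peers if py >= y_target)
        rows.append((y_pct, 100 * (x_req - x_min) / (x_max - x_min)))
    return rows


for row in bottleneck_table([1, 2, 3, 4, 5], [2, 1, 4, 3, 5], steps=4):
    print(row)
# (0.0, None), (25.0, None), (50.0, 50.0), (75.0, 50.0), (100.0, 100.0)
```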

Two key NCA parameters are the ceiling accuracy and the necessity effect size d. The ceiling accuracy is the number of observations on or below the ceiling line divided by the total number of observations, multiplied by 100. While the accuracy of the CE-FDH ceiling line is 100% by definition, the accuracy of other ceiling lines, such as the CR-FDH, can be less than 100%. There is no specific rule regarding the acceptable level of accuracy. However, comparing the estimated accuracy with a benchmark value (e.g., 95%) can help assess the quality of the solution.

The necessity effect size d and its statistical significance indicate whether a variable or construct is a necessary condition. d is calculated by dividing the empty space (called the ceiling zone) by the entire area that can contain observations (called the scope). Thus, by definition, 0 ≤ d ≤ 1. Dul (2016) suggested that 0 < d < 0.1 can be characterized as a small effect, 0.1 ≤ d < 0.3 as a medium effect, 0.3 ≤ d < 0.5 as a large effect, and d ≥ 0.5 as a very large effect.

However, the absolute magnitude of d only indicates substantive significance, that is, the meaningfulness of the effect size from a practical perspective. Therefore, NCA also enables researchers to evaluate the statistical significance of the necessity effect size by means of a permutation test, which should also be considered when deciding about a necessity hypothesis (Dul, 2020). SmartPLS supports permutation-based significance testing of the effect size d for regression models via the NCA permutation algorithm.
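
The following sketch computes the CE-FDH effect size d, the ceiling accuracy of any ceiling line, and Dul's (2016) size labels. It assumes that the scope is taken from the observed minimum and maximum of X and Y (a theoretical scope could be used instead), and all function names are hypothetical rather than part of SmartPLS.

```python
from typing import Callable, List, Sequence, Tuple


def ce_fdh_peers(xs: Sequence[float], ys: Sequence[float]) -> List[Tuple[float, float]]:
    """Upper-left corner points of the CE-FDH step function, sorted by x."""
    peers: List[Tuple[float, float]] = []
    best = float("-inf")
    for x, y in sorted(zip(xs, ys)):
        if y > best:
            best = y
            if peers and peers[-1][0] == x:
                peers[-1] = (x, y)
            else:
                peers.append((x, y))
    return peers


def ce_fdh_effect_size(xs: Sequence[float], ys: Sequence[float]) -> float:
    """d = ceiling zone / scope, with the scope based on observed min and max (assumption)."""
    peers = ce_fdh_peers(xs, ys)
    x_min, x_max = min(xs), max(xs)
    y_min, y_max = min(ys), max(ys)
    scope = (x_max - x_min) * (y_max - y_min)
    if scope == 0:
        return 0.0
    zone = 0.0
    # Each step of the ceiling leaves an empty rectangle between it and y_max
    for (x0, c), (x1, _) in zip(peers, peers[1:] + [(x_max, None)]):
        zone += (x1 - x0) * (y_max - c)
    return zone / scope


def ceiling_accuracy(
    xs: Sequence[float], ys: Sequence[float], ceiling: Callable[[float], float], tol: float = 1e-9
) -> float:
    """Percentage of observations on or below the ceiling line."""
    on_or_below = sum(1 for x, y in zip(xs, ys) if y <= ceiling(x) + tol)
    return 100.0 * on_or_below / len(xs)


def effect_size_label(d: float) -> str:
    """Dul's (2016) benchmarks; d = 0 is not covered there and is labeled 'none' here."""
    if d <= 0:
        return "none"
    if d < 0.1:
        return "small"
    if d < 0.3:
        return "medium"
    if d < 0.5:
        return "large"
    return "very large"


xs = [1, 2, 3, 4, 5]  # hypothetical data
ys = [2, 1, 4, 3, 5]
d = ce_fdh_effect_size(xs, ys)
print(d, effect_size_label(d))  # 0.5 very large
# CE-FDH ceiling: highest y observed at or left of x, hence 100% accuracy
print(ceiling_accuracy(xs, ys, lambda x: max(y for xi, y in zip(xs, ys) if xi <= x)))  # 100.0
# A hypothetical straight ceiling line y = 1.5 + 0.75x leaves one point above it
print(ceiling_accuracy(xs, ys, lambda x: 1.5 + 0.75 * x))  # 80.0
```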

NCA Settings in SmartPLS

The Necessary condition analysis (NCA) algorithm for regression models in SmartPLS requires only a single parameter setting: the Number of steps for bottleneck tables. It specifies the number of steps into which the dependent variable is divided in NCA's bottleneck tables. The default value is 10, which displays the results in 10% steps from 0% to 100%. You may also choose a more detailed display; for example, a value of 20 displays the results in 5% steps from 0% to 100%.

Moreover, when executing NCA, make sure that the scale of your indicators is as expected. For example, indicators on an interval scale from 1 to 7 should show a minimum value of 1 and a maximum value of 7 (this may not be the case if, for instance, no respondent selected 1). This is important to ensure the accuracy of the results.
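
Before running NCA, a quick check like the one below can flag indicators whose observed minimum or maximum differs from the intended scale endpoints. It is a hypothetical helper, not a SmartPLS feature, and the indicator names PU1 and PU2 are made up for illustration.

```python
from typing import Dict, Sequence, Tuple


def scale_mismatches(
    data: Dict[str, Sequence[float]], scale_min: float, scale_max: float
) -> Dict[str, Tuple[float, float]]:
    """Return {indicator: (observed min, observed max)} for indicators whose
    observed values do not span the intended scale endpoints."""
    mismatches: Dict[str, Tuple[float, float]] = {}
    for name, values in data.items():
        lo, hi = min(values), max(values)
        if lo != scale_min or hi != scale_max:
            mismatches[name] = (lo, hi)
    return mismatches


# Hypothetical indicators on a 1-to-7 scale; nobody chose 1 for PU2
survey = {"PU1": [1, 4, 7, 5], "PU2": [2, 3, 6, 7]}
print(scale_mismatches(survey, 1, 7))  # {'PU2': (2, 7)}
```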

NCA Permutation Settings in SmartPLS

The NCA permutation algorithm for regression models in SmartPLS requires the settings described in the following.

Subsamples

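The number of permutations determines how often the dataset is randomly permuted (i.e., the observed values are randomly reassigned across cases, breaking any systematic relationship between X and Y) to approximate the non-parametric reference distribution of the effect size under the null hypothesis.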

To ensure stability of the results, the number of permutations should be sufficiently large. For a quick initial assessment, one may choose a smaller number of permutation subsamples (e.g., 1,000). For obtaining the final results, however, one should use a large number of permutations (e.g., at least 5,000).
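
The logic of such a permutation test can be sketched as follows, under stated assumptions: the outcome values are randomly reshuffled across cases, the CE-FDH effect size d is recomputed for each permutation, and the p-value is estimated as the share of permuted effect sizes at least as large as the observed d. This is a generic permutation test, not necessarily identical to SmartPLS's procedure (e.g., in the ceiling line used or the exact p-value estimator), and all names are hypothetical.

```python
import random
from typing import Optional, Sequence, Tuple


def ce_fdh_effect_size(xs: Sequence[float], ys: Sequence[float]) -> float:
    """CE-FDH necessity effect size d = ceiling zone / scope (observed min and max)."""
    peers = []
    best = float("-inf")
    for x, y in sorted(zip(xs, ys)):
        if y > best:  # upper-left corner points of the step function
            best = y
            if peers and peers[-1][0] == x:
                peers[-1] = (x, y)
            else:
                peers.append((x, y))
    x_min, x_max = min(xs), max(xs)
    y_min, y_max = min(ys), max(ys)
    scope = (x_max - x_min) * (y_max - y_min)
    if scope == 0:
        return 0.0
    zone = sum((x1 - x0) * (y_max - c)
               for (x0, c), (x1, _) in zip(peers, peers[1:] + [(x_max, None)]))
    return zone / scope


def permutation_test(
    xs: Sequence[float], ys: Sequence[float], n_perm: int = 5000, seed: Optional[int] = None
) -> Tuple[float, float]:
    """Return (observed d, estimated p-value) for the null hypothesis of no necessity."""
    rng = random.Random(seed)
    observed = ce_fdh_effect_size(xs, ys)
    shuffled = list(ys)
    at_least_as_large = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # reassign outcome values to cases at random
        if ce_fdh_effect_size(xs, shuffled) >= observed:
            at_least_as_large += 1
    return observed, at_least_as_large / n_perm


# Hypothetical data with a sharp ceiling; 1,000 permutations for a quick check
d, p = permutation_test(list(range(20)), list(range(20)), n_perm=1000, seed=1)
alpha = 0.05  # significance level
print(f"d = {d:.3f}, p = {p:.3f}, significant: {p <= alpha}")
```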

Do parallel processing

If selected, the NCA permutation algorithm is performed on multiple processors (if your computer offers more than one core). Because each subsample can be calculated independently, subsamples can be computed in parallel, which reduces computation time.

Significance level

Specifies the significance level (e.g., 0.05) used to assess the statistical significance of the necessity effect size d.

Random number generator

The algorithm randomly generates subsamples from the original data set, which requires a seed. You have the option to choose between a random seed and a fixed seed.

The random seed produces different random numbers, and therefore different results, every time the algorithm is executed (this was the default and only option in SmartPLS 3).

The fixed seed uses a pre-specified seed that is the same for every execution of the algorithm. Thus, it produces the same results if the same number of subsamples is drawn, which addresses concerns about the replicability of research findings.
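
The effect of a fixed seed can be illustrated with Python's `random.Random`: two generators created with the same seed produce identical sequences of permutations, whereas `seed=None` seeds the generator from operating-system randomness (or the system time) and gives different draws on each run. The helper `draw_permutations` is hypothetical; SmartPLS uses its own random number generator.

```python
import random
from typing import List, Optional, Sequence


def draw_permutations(
    values: Sequence[int], n_perm: int, seed: Optional[int] = None
) -> List[List[int]]:
    """Draw n_perm random permutations of values; a fixed seed makes them reproducible."""
    rng = random.Random(seed)  # seed=None behaves like the "random seed" option
    draws = []
    for _ in range(n_perm):
        perm = list(values)
        rng.shuffle(perm)
        draws.append(perm)
    return draws


run_1 = draw_permutations(range(10), n_perm=5, seed=2024)
run_2 = draw_permutations(range(10), n_perm=5, seed=2024)
print(run_1 == run_2)  # True: same seed, same permutations
```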

References

- Bokrantz, J. and Dul, J. (2023). Building and Testing Necessity Theories in Supply Chain Management. Journal of Supply Chain Management, 59(1): 48–65.

- Dul, J. (2016). Necessary Condition Analysis (NCA): Logic and Methodology of "Necessary but not Sufficient" Causality. Organizational Research Methods, 19(1): 10–52.

- Dul, J. (2020). Conducting Necessary Condition Analysis. Sage: London.

- Hair, J.F., Sarstedt, M., Ringle, C.M., and Gudergan, S.P. (2024). Advanced Issues in Partial Least Squares Structural Equation Modeling (PLS-SEM), 2nd Ed., Thousand Oaks, CA: Sage.

- Hauff, S., Richter, N.F., Sarstedt, M., and Ringle, C.M. (2024). Importance and Performance in PLS-SEM and NCA: Introducing the Combined Importance-Performance Map Analysis (cIPMA). Journal of Retailing and Consumer Services, 78, 103723.

- Richter, N.F., Hauff, S., Kolev, A.E., and Schubring, S. (2023). Dataset On An Extended Technology Acceptance Model: A Combined Application of PLS-SEM and NCA. Data in Brief, 109190.

- Richter, N.F., Hauff, S., Ringle, C.M., Sarstedt, M., Kolev, A., and Schubring, S. (2023). How to Apply Necessary Condition Analysis in PLS-SEM. In J. F. Hair, R. Noonan, and H. Latan (Eds.), Partial Least Squares Structural Equation Modeling: Basic Concepts, Methodological Issues and Applications (pp. 267–297). Springer: Cham.

- Richter, N.F., Schubring, S., Hauff, S., Ringle, C.M., and Sarstedt, M. (2020). When Predictors of Outcomes are Necessary: Guidelines for the Combined use of PLS-SEM and NCA. Industrial Management & Data Systems, 120(12): 2243–2267.

- Sarstedt, M., Richter, N.F., Hauff, S., and Ringle, C.M. (2024). Combined Importance–performance Map Analysis (cIPMA) in Partial Least Squares Structural Equation Modeling (PLS–SEM): A SmartPLS 4 Tutorial. Journal of Marketing Analytics.
